In this post, we explore the purpose of the robots.txt file and how to maintain a different, custom version of it on every environment of your Drupal application.

robots.txt File: Introduction

robots.txt is a standard used by websites to communicate with search engines' web crawlers. It's a text file that is placed in the root directory of a website and provides instructions to web robots about which pages or sections of the site should or should not be crawled.

The file uses a simple syntax to specify rules for different user agents (web robots). For example, it can instruct a search engine bot not to crawl certain directories or pages on the website. The goal is to control how search engines and other bots interact with the site by steering them away from specific sections.

It's important to note that while robots.txt can effectively guide well-behaved web crawlers, it's not a foolproof security measure. Malicious bots may not respect the rules specified in the file. If there are pages or content that should not be accessed by the public, additional security measures should be implemented, such as authentication and authorisation mechanisms. As Google mentions:

This is used mainly to avoid overloading your site with requests; it is not a mechanism for keeping a web page out of Google. To keep a web page out of Google, block indexing with noindex or password-protect the page.

Here's a very basic example of a `robots.txt` file:

    User-agent: *
    Disallow: /private/
    Disallow: /restricted-page.html

In this example:

- `User-agent: *` applies the rules to all web crawlers.
- `Disallow: /private/` instructs crawlers not to crawl any pages in the /private/ directory.
- `Disallow: /restricted-page.html` instructs crawlers not to crawl a specific page named restricted-page.html.
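To make the matching concrete, here is a minimal shell sketch of how a path could be checked against the Disallow rules of the example. It is a deliberate simplification and not how any particular crawler is implemented: every rule is treated as a plain path prefix, and User-agent groups, Allow lines, wildcards and case-insensitive directive names are all ignored. The function name `is_disallowed` and the sample paths are made up for illustration.

```shell
#!/bin/sh
# Simplified check: succeeds (exit 0) if URL_PATH starts with any
# "Disallow:" value found in RULES_FILE. Illustration only.
is_disallowed() {
  rules_file=$1
  url_path=$2
  while IFS= read -r line || [ -n "$line" ]; do
    case "$line" in
      Disallow:*)
        rule=${line#Disallow:}
        # Trim the spaces that follow the colon.
        rule=${rule#"${rule%%[! ]*}"}
        # An empty Disallow value blocks nothing.
        [ -n "$rule" ] || continue
        case "$url_path" in
          "$rule"*) return 0 ;;
        esac
        ;;
    esac
  done < "$rules_file"
  return 1
}

# Write the example rules to a temporary file and try two paths.
tmp_robots=$(mktemp)
printf 'User-agent: *\nDisallow: /private/\nDisallow: /restricted-page.html\n' > "$tmp_robots"

is_disallowed "$tmp_robots" /private/report.pdf && echo "/private/report.pdf: blocked"
is_disallowed "$tmp_robots" /blog/hello || echo "/blog/hello: allowed"

rm -f "$tmp_robots"
```

Real crawlers apply more elaborate matching, so treat this only as a way to build intuition about prefix rules.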

Robots.txt and Drupal

Drupal comes with its own robots.txt file. The default robots.txt file contents for Drupal 10.1.6 (the version at the time of writing this) look like the following:

    #
    # robots.txt
    #
    # This file is to prevent the crawling and indexing of certain parts
    # of your site by web crawlers and spiders run by sites like Yahoo!
    # and Google. By telling these "robots" where not to go on your site,
    # you save bandwidth and server resources.
    #
    # This file will be ignored unless it is at the root of your host:
    # Used: http://example.com/robots.txt
    # Ignored: http://example.com/site/robots.txt
    #
    # For more information about the robots.txt standard, see:
    # http://www.robotstxt.org/robotstxt.html

    User-agent: *
    # CSS, JS, Images
    Allow: /core/*.css$
    Allow: /core/*.css?
    Allow: /core/*.js$
    Allow: /core/*.js?
    Allow: /core/*.gif
    Allow: /core/*.jpg
    Allow: /core/*.jpeg
    Allow: /core/*.png
    Allow: /core/*.svg
    Allow: /profiles/*.css$
    Allow: /profiles/*.css?
    Allow: /profiles/*.js$
    Allow: /profiles/*.js?
    Allow: /profiles/*.gif
    Allow: /profiles/*.jpg
    Allow: /profiles/*.jpeg
    Allow: /profiles/*.png
    Allow: /profiles/*.svg

    # Directories
    Disallow: /core/
    Disallow: /profiles/

    # Files
    Disallow: /README.txt
    Disallow: /web.config

    # Paths (clean URLs)
    Disallow: /admin/
    Disallow: /comment/reply/
    Disallow: /filter/tips
    Disallow: /node/add/
    Disallow: /search/
    Disallow: /user/register
    Disallow: /user/password
    Disallow: /user/login
    Disallow: /user/logout
    Disallow: /media/oembed
    Disallow: /*/media/oembed

    # Paths (no clean URLs)
    Disallow: /index.php/admin/
    Disallow: /index.php/comment/reply/
    Disallow: /index.php/filter/tips
    Disallow: /index.php/node/add/
    Disallow: /index.php/search/
    Disallow: /index.php/user/password
    Disallow: /index.php/user/register
    Disallow: /index.php/user/login
    Disallow: /index.php/user/logout
    Disallow: /index.php/media/oembed
    Disallow: /index.php/*/media/oembed

Similar to the short example we presented above, the file is full of Allow: and Disallow: directives targeting file and directory paths.

But what if we need to customise the default contents of the file and add or remove certain lines?

Customising the Default robots.txt file

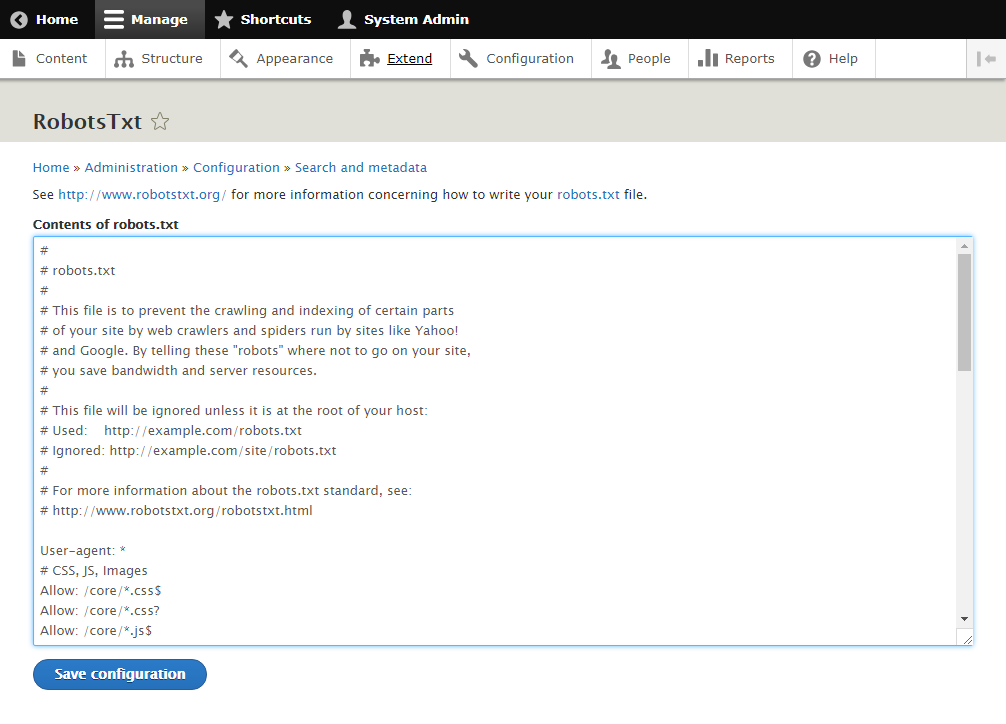

The RobotsTxt module provides the ability to maintain a custom robots.txt file and apply changes to it using the Drupal back office. From the module's page:

Use this module when you are running multiple Drupal sites from a single code base (multisite) and you need a different robots.txt file for each one. This module generates the robots.txt file dynamically and gives you the chance to edit it, on a per-site basis, from the web UI.

Expanding on that, this module is also useful in a single-site scenario where you simply need to maintain your own custom version of robots.txt and not stick to Drupal's default.

To install the module, run the following command on your Terminal/Command Prompt/Shell in your project's root folder i.e. where your composer.json file lives (the latest stable version available might have gone up since the time of writing this post):

    composer require 'drupal/robotstxt:^1.5'

Then, use Drush to enable the module (or do the same on the UI under /admin/modules):

    drush en robotstxt

Then, the module's configuration screen should be available under /admin/config/search/robotstxt.

You can now edit the contents of your robots.txt file and save it. But this won't be enough in most cases so read on to understand more about how to make your robots.txt file changes persist through code deployments.

Preventing Drupal from Restoring robots.txt

To allow your custom changes to take effect through the RobotsTxt module, you'll have to delete or rename the static robots.txt file in your codebase; as long as that file exists in the web root, it is served directly and the module's dynamically generated version is never reached. But as you'll probably notice the next time you run composer install or composer update, Drupal keeps regenerating the robots.txt file. To prevent this, you'll have to instruct Drupal accordingly, and there are two possible scenarios:

1. If you're using Drupal Scaffold

According to Drupal documentation:

The Drupal Composer Scaffold project provides a Composer plugin for placing scaffold files (like index.php, update.php, …) from the drupal/core project into their desired location inside the web root. Only individual files may be scaffolded with this plugin.

The purpose of scaffolding files is to allow Drupal sites to be fully managed by Composer, and still allow individual asset files to be placed in arbitrary locations.

If your project is built using Drupal Scaffold, your composer.json file should already contain a "drupal-scaffold" section under "extra". In that case, you can add the following to it to prevent Drupal from regenerating the robots.txt file every time Composer builds:

    "drupal-scaffold": {
        "file-mapping": {
            "[web-root]/robots.txt": false
        }
    },

It is possible that there are already items under "drupal-scaffold". If that's the case, when you add the "[web-root]/robots.txt": false entry under "file-mapping", make sure the JSON stays valid (commas between entries, matching braces) and keep the indentation consistent.

2. If you're not using Drupal Scaffold

Composer command events can be really handy for tasks like this. We are going to use the following command events:

- post-install-cmd: Occurs after the install command has been executed with a lock file present.

- post-update-cmd: Occurs after the update command has been executed, or after the install command has been executed without a lock file present.

Both should go into our composer.json file and within the scripts element:

    "scripts": {
        "post-install-cmd": [
            "rm web/robots.txt"
        ],
        "post-update-cmd": [
            "rm web/robots.txt"
        ]
    },

This way, every time you run composer install or composer update commands, these scripts will remove Drupal's auto-generated robots.txt file.

To make sure that there is a robots.txt file to remove before running the rm command, and also to print a user-friendly message when the file is removed, you can use a richer version of the above:

    "scripts": {
        "post-install-cmd": [
            "test -e web/robots.txt && rm web/robots.txt && echo 'robots.txt file deleted' || true"
        ],
        "post-update-cmd": [
            "test -e web/robots.txt && rm web/robots.txt && echo 'robots.txt file deleted' || true"
        ]
    },

Here the message is printed only after a successful removal, and the trailing || true keeps the exit status at zero when there is no file to remove, so Composer does not report a failed script. Now we no longer have to deal with Drupal's auto-generated robots.txt file.
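If the one-liner becomes hard to read inside composer.json, the same logic could live in a small shell script that both Composer hooks call. The following is only a sketch under assumptions: the function name `remove_scaffolded_robots` is invented for this example, and the default web root of `web` simply mirrors the paths used above.

```shell
#!/bin/sh
# Remove the scaffolded robots.txt from a web root, if it is there.
# Always returns 0 so that a missing file does not fail the Composer run.
remove_scaffolded_robots() {
  web_root=${1:-web}
  target="$web_root/robots.txt"
  if [ -e "$target" ]; then
    rm -- "$target" && echo "Removed $target"
  else
    echo "No $target found, nothing to remove"
  fi
  return 0
}

# Use the first script argument as the web root, or "web" if none is given.
remove_scaffolded_robots "$@"
```

Saved under a path of your choosing (for instance a hypothetical scripts/remove-robots.sh), it could replace the inline command in both hooks with something like sh scripts/remove-robots.sh web.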

Ignoring RobotsTxt Module's Configuration

Now that we have a robust way of defining our own robots.txt file, we need to make sure that our changes won't be overwritten every time new code gets deployed to our environment i.e. every time Configuration in code gets imported. To achieve this we'll use the Config Ignore module. From the module's project page:

This module effectively lets you treat configuration as if it was content. It does so by changing the configuration that is about to be imported to the one that exists already on the site and therefore there is no difference that the configuration import would need to update.

This means that, every time we import Configuration on our site (locally or as part of a deployment workflow on a hosting environment), we can force Drupal to skip any Configuration items we want it to ignore. That way, the active Configuration we have in place won't get overwritten.

To install the module, run the following command on your Terminal (the latest stable version available might have gone up since the time of writing this post):

    composer require 'drupal/config_ignore:^3.1'

Then, use Drush to enable the module (or do the same on the UI under /admin/modules):

    drush en config_ignore

Then, add robotstxt.settings to the list of ignored configurations under /admin/config/development/configuration/ignore and save the form.

Finally, export the Configuration with drush:

    drush cex

The last command will put all the Configuration items, i.e. the two enabled modules and their respective Configurations, in our codebase. Then, we need to commit and push these code changes to our remote repository:

    git add -A
    git commit -m "Adding Composer commands to remove auto-generated robots.txt file and enabling and configuring Robots.Txt and Config Ignore modules"
    git push origin {BRANCH_NAME}

You can replace {BRANCH_NAME} with the branch you're targeting, e.g. develop / master etc., depending on your workflow.
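A quick sanity check on the exported files, ideally run before committing, is to confirm that robotstxt.settings really ended up in the Config Ignore export. The sketch below assumes that the export contains a config_ignore.settings.yml file in which each ignored item appears as an unquoted YAML list entry; the function name `is_config_ignored` and the config/sync directory are assumptions for illustration, so adjust them to your project.

```shell
#!/bin/sh
# Succeeds if NAME appears as a plain "- NAME" list entry in the
# config_ignore.settings.yml file inside CONFIG_DIR.
is_config_ignored() {
  config_file="$1/config_ignore.settings.yml"
  name=$2
  # A missing export file counts as "not ignored".
  [ -f "$config_file" ] || return 1
  # Strip leading indentation, then look for an exact "- NAME" line.
  sed 's/^[[:space:]]*//' "$config_file" | grep -Fxq -- "- $name"
}

# config/sync is a hypothetical location of the exported configuration.
if is_config_ignored config/sync robotstxt.settings; then
  echo "robotstxt.settings is ignored on import"
else
  echo "robotstxt.settings is NOT listed as ignored"
fi
```

If the second message appears, revisit the Config Ignore form and export the Configuration again before pushing.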

You've made it! Now you can have a different robots.txt file on every environment your Drupal application lives on and every time you push/deploy new code, your robots.txt contents won't be overwritten.
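As an illustration of what might actually differ between environments, the sketch below prints a restrictive robots.txt for every non-production environment and a short, permissive one for production. The environment names, the function name `robots_for_env` and the specific production rules are all hypothetical; the real contents depend on your site.

```shell
#!/bin/sh
# Print example robots.txt content for a given environment name.
# "production" and the rules below are illustrative assumptions.
robots_for_env() {
  case "$1" in
    production)
      printf '%s\n' 'User-agent: *' 'Disallow: /admin/' 'Disallow: /user/login'
      ;;
    *)
      # Any other environment: ask all crawlers to stay away entirely.
      printf '%s\n' 'User-agent: *' 'Disallow: /'
      ;;
  esac
}

robots_for_env staging
```

The output can be pasted into the module's form under /admin/config/search/robotstxt on the matching environment. As noted earlier, this only guides well-behaved crawlers, so a staging site that must stay private still needs password protection.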
